Ethics in AI

4. The Dangers of Misinformation

Misinformation poses a significant threat to society, especially in an age when information spreads rapidly online. Because AI can process vast amounts of data, it is a double-edged sword in this context: it can help combat misinformation, but it can also be used to create and spread it.

The Impact of Misclassification in AI

AI systems, particularly those used for content moderation, sentiment analysis, and recommendation, can misclassify content. When the task is detecting misinformation, these errors come in two forms, and both can have serious societal consequences.

False positives occur when accurate, legitimate content is incorrectly flagged as misinformation. This can suppress truthful reporting or legitimate speech and erode public trust in moderation systems.

False negatives occur when misinformation is not flagged. The false content slips through the cracks, spreading unchecked and potentially influencing public opinion or causing harm before it is identified and addressed.
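To make the two error types concrete, here is a minimal Python sketch. It assumes a hypothetical detector that gives each post a score between 0 and 1 and flags the post as misinformation when the score meets a chosen threshold. The labels, scores, and the `confusion_counts` helper are invented for illustration and do not come from any real system.

```python
# Minimal sketch: counting false positives and false negatives for a
# hypothetical misinformation detector. Label 1 = misinformation,
# label 0 = legitimate content. All values below are made up.

def confusion_counts(labels, scores, threshold):
    """Return (false_positives, false_negatives) when every item with
    score >= threshold is flagged as misinformation."""
    fp = fn = 0
    for label, score in zip(labels, scores):
        flagged = score >= threshold
        if flagged and label == 0:
            fp += 1  # legitimate content wrongly flagged
        elif not flagged and label == 1:
            fn += 1  # misinformation that slipped through
    return fp, fn

labels = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.7, 0.4, 0.6, 0.2, 0.1, 0.55, 0.8]

for threshold in (0.5, 0.75):
    fp, fn = confusion_counts(labels, scores, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

With these made-up values, a threshold of 0.5 produces two false positives and one false negative, while raising it to 0.75 produces no false positives but two false negatives. Adjusting the threshold trades one kind of error for the other rather than eliminating both, which is why the choice of threshold is itself an ethical decision.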

AI and the Spread of Misinformation

AI can be used to both create and disseminate misinformation. Deepfake technology, which uses AI to create realistic but fake videos or images, is a prime example. These falsified media can be used to spread false information, manipulate public opinion, or discredit individuals or organizations.

Examples of Misinformation Spread by AI:

- Deepfakes: AI-generated videos that depict people saying or doing things they never actually did can be used to deceive the public or damage reputations.

- Bot Accounts: AI-driven bots on social media platforms can spread misinformation quickly and broadly, making it appear as though false information is widely accepted or endorsed.

- Fake News Generation: AI can generate realistic-looking news articles that contain false information, which can be difficult for the average person to distinguish from articles published by legitimate news sources.

Combating Misinformation with AI

While AI can contribute to the spread of misinformation, it can also be part of the solution. AI systems are being developed to detect and counteract misinformation by analyzing patterns, cross-referencing data, and flagging potentially false content.

Strategies to Combat Misinformation:

- AI-Powered Fact-Checking: Using AI to automatically check the accuracy of information by comparing it against reliable sources.

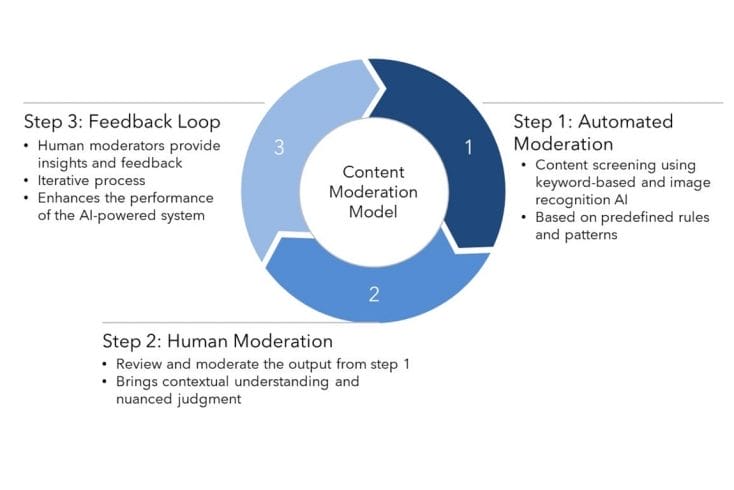

- Content Moderation: Employing AI to detect and remove harmful or false content from social media platforms.

Image Source: Avasant

Image Source: Avasant
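The fact-checking idea of comparing a claim against reliable sources can be illustrated with a deliberately simple Python sketch. It assumes a small, hypothetical table of already-verified claims (`VERIFIED_CLAIMS`) and uses Jaccard similarity over word sets as a stand-in for the much richer matching that production fact-checking systems use. The helper names and the 0.5 similarity cutoff are illustrative assumptions.

```python
# Toy sketch of claim matching for automated fact-checking.
# Assumption: a hypothetical table of claims already verified by a trusted
# source. Jaccard word overlap stands in for real semantic matching.
import re

def tokens(text):
    """Lowercased set of words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

# Hypothetical reference data: claim text -> verdict from a trusted source.
VERIFIED_CLAIMS = {
    "the eiffel tower is located in paris": "true",
    "drinking bleach cures viral infections": "false",
}

def check_claim(claim, min_similarity=0.5):
    """Return (matched_claim, verdict, score) for the closest verified claim,
    or None when nothing is similar enough to reach a conclusion."""
    claim_tokens = tokens(claim)
    best = max(VERIFIED_CLAIMS, key=lambda ref: jaccard(claim_tokens, tokens(ref)))
    score = jaccard(claim_tokens, tokens(best))
    if score < min_similarity:
        return None  # no confident match: route to a human fact-checker
    return best, VERIFIED_CLAIMS[best], score

print(check_claim("Drinking bleach cures viral infections!"))
print(check_claim("The moon is made of cheese"))
```

The first call matches the stored "false" verdict exactly, while the second finds no sufficiently similar verified claim and returns `None`. Declining to label a claim when the evidence is weak, instead of guessing, keeps an automated checker from wrongly marking accurate content as false or false content as verified.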

Conclusion

Misinformation is a pervasive challenge in our digital world, with far-reaching consequences for society. While AI can contribute to the spread of false information, it also provides critical tools for combating this issue. By understanding and harnessing AI's potential, we can work towards a more informed and trustworthy information landscape, helping truth prevail in an era of rapid information exchange.